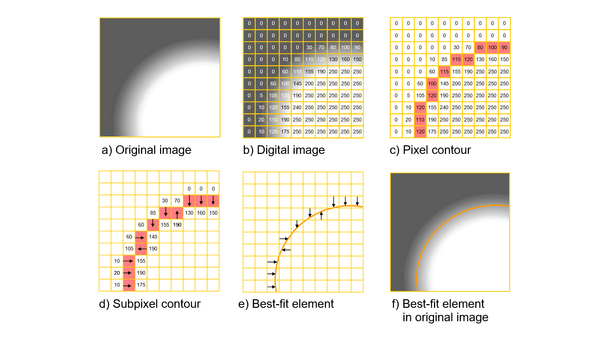

Image processing is part of the standard equipment of most optical and multisensor coordinate measuring machines because it can be applied flexibly and gives good visualisation of both the object and the measured features. As in visual measurement with measuring microscopes, the object to be measured is imaged through a lens onto a matrix camera, as shown in simplified form in Figure 7. The camera electronics convert the optical signal into a digital image. Image processing software on an evaluation computer then analyses the intensity distribution in this image to calculate the measurement points. Each of the individual components (the lighting system, imaging optics, semiconductor camera, signal processing electronics and image processing algorithms) has a decisive influence on the performance of image processing sensors [1, 4].
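The text states only that the intensity distribution is analysed to obtain measurement points. The following is a minimal sketch of one common approach, not necessarily the method used by any particular sensor. It assumes a single edge along one scan line of grey values. The edge is placed where the intensity crosses 50% of the way between the darkest and brightest values, with linear interpolation between neighbouring pixels for sub-pixel resolution. The function names `subpixel_edge` and `edge_to_machine_x`, the 50% threshold and the calibrated parameters `center_px` and `pixel_size_mm` are all hypothetical.

```python
def subpixel_edge(profile, threshold=None):
    """Return the sub-pixel index where the grey-value profile first crosses
    the threshold, or None if no crossing exists.

    Hypothetical sketch: assumes a single edge on the scan line and defaults
    to a 50% threshold between the minimum and maximum intensity.
    """
    lo, hi = min(profile), max(profile)
    if threshold is None:
        threshold = (lo + hi) / 2.0
    for i in range(len(profile) - 1):
        a, b = profile[i], profile[i + 1]
        # The threshold lies between neighbouring pixels a and b (inclusive),
        # and the intensity actually changes between them.
        if (a - threshold) * (b - threshold) <= 0 and a != b:
            # Linear interpolation between pixel i and pixel i + 1.
            return i + (threshold - a) / (b - a)
    return None


def edge_to_machine_x(edge_px, stage_x_mm, center_px, pixel_size_mm):
    """Convert an edge position in pixels into a machine X coordinate.

    Hypothetical: stage_x_mm is the axis position of the image centre,
    center_px the pixel index of that centre, and pixel_size_mm the
    calibrated pixel size in object space (camera pixel / magnification).
    """
    return stage_x_mm + (edge_px - center_px) * pixel_size_mm


if __name__ == "__main__":
    # 8-bit grey values along one scan line: dark object, then bright background.
    scan_line = [10, 10, 30, 70, 90, 90]
    edge = subpixel_edge(scan_line)
    print(edge)  # 2.5
    print(edge_to_machine_x(edge, stage_x_mm=100.0, center_px=2.0,
                            pixel_size_mm=0.005))
```

The 50% criterion is a simple choice that works for both dark-to-bright and bright-to-dark edges. Real sensors may instead fit a model to the whole profile or evaluate intensity gradients in two dimensions, which is why the lighting, optics and algorithms mentioned above all affect the result.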
